Music Audio Similarity: A Signal Processing-based Shazam-like project

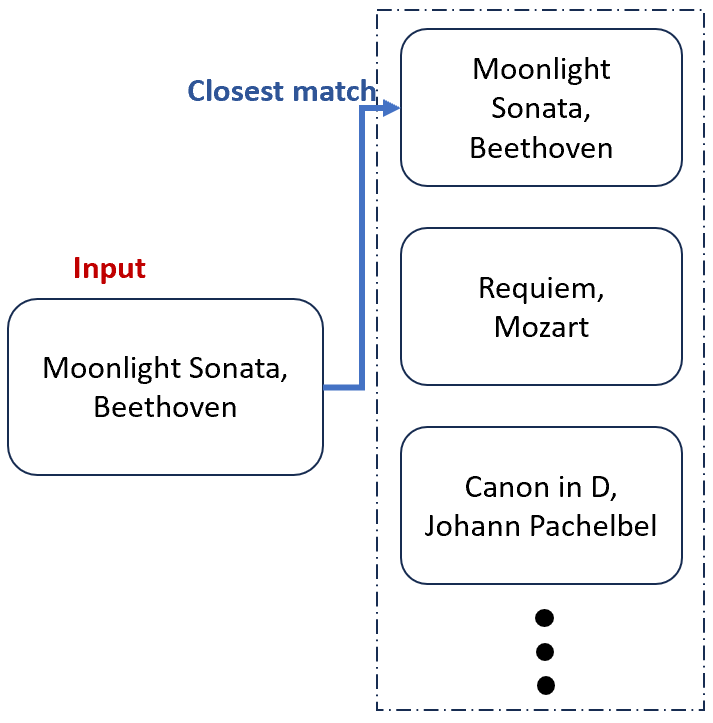

This was a collaboration with my classmate Dhawal Modi from our signal processing class at UC Merced. I wanted to work on an audio project given my interest in music production. With the Shazam app as our motivation, we set out to match a classical music recording, played on just one instrument, to its closest match in a dataset of orchestral music. The idea was to use signal processing techniques instead of machine learning, so that the project stayed close to the concepts we learned in class.

We started with the MusicNet dataset, which has orchestral recordings and their digital "sheet music" in the form of MIDI files. We used the MIDI files for generating our test audio samples, where we recreated the same pieces using single instruments like piano or violin. Then came the challenge: how do you measure similarity between these recordings, especially when tempos or instruments differ? To solve this, we applied a few signal processing techniques to extract the musical essence of the recordings and compare them.

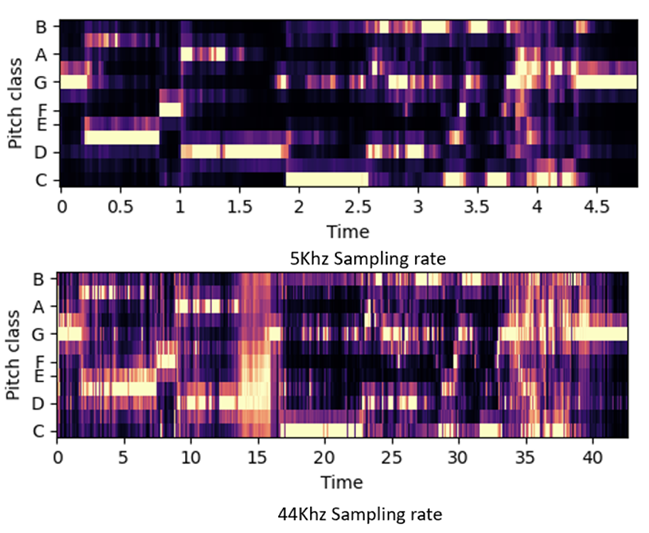

First, we used chromagrams instead of spectrograms. A spectrogram breaks audio into its full range of frequencies over time, which can be too detailed and varies greatly across instruments. A chromagram simplifies this by folding the audio into 12 bins, one for each pitch class of the chromatic scale (C, C#, D, and so on up to B). Because every octave of a note lands in the same bin, the same sequence of notes produces a similar chromagram whether it is played on a piano or a violin, and regardless of the octave it is played in. This made it much easier to focus on the musical structure instead of the instrument's unique sound.
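To make the folding step concrete, here is a minimal NumPy sketch of a chromagram. It is not the project's actual code: the function name `simple_chromagram`, the FFT size, the hop length, and the 27.5 Hz to 4200 Hz frequency range are all illustrative assumptions, and a real audio library would do this more carefully.

```python
import numpy as np

def simple_chromagram(signal, sr, n_fft=4096, hop=2048):
    """Fold STFT magnitudes into 12 pitch-class bins (0 = C, ..., 11 = B).

    Illustrative sketch only; parameter values are assumptions.
    """
    window = np.hanning(n_fft)
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    # Keep only FFT bins inside a typical musical range (assumed: A0 to about C8)
    valid = (freqs >= 27.5) & (freqs <= 4200.0)
    # Convert each bin frequency to a MIDI note number, then to a pitch class
    midi = 69 + 12 * np.log2(freqs[valid] / 440.0)
    pitch_class = np.round(midi).astype(int) % 12

    n_frames = 1 + (len(signal) - n_fft) // hop
    chroma = np.zeros((12, n_frames))
    for t in range(n_frames):
        frame = signal[t * hop : t * hop + n_fft] * window
        mag = np.abs(np.fft.rfft(frame))[valid]
        # Sum the magnitudes of all bins that share a pitch class
        chroma[:, t] = np.bincount(pitch_class, weights=mag, minlength=12)

    # Normalise each frame so overall loudness does not affect comparisons
    norms = np.linalg.norm(chroma, axis=0, keepdims=True)
    return chroma / np.maximum(norms, 1e-12)
```

With this sketch, a 440 Hz tone (A4) and an 880 Hz tone (A5) both put most of their energy in the bin for A, which is the octave invariance described above.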

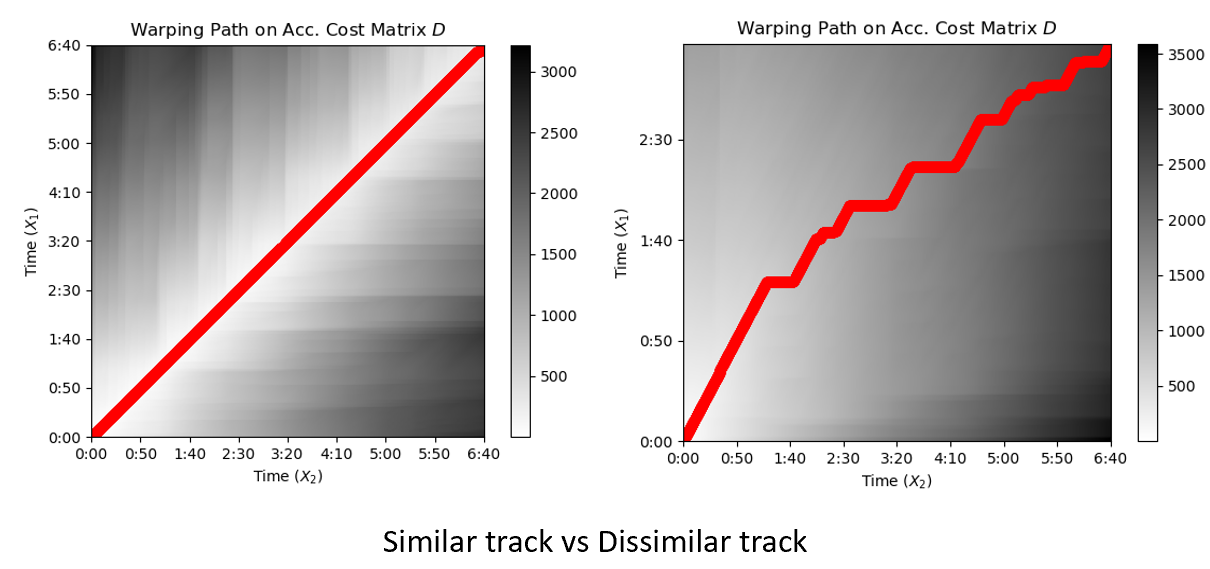

To compare tracks, we used Dynamic Time Warping (DTW), a technique for measuring similarity between two time-dependent sequences. DTW first computes a cost for every pair of frames from the two chromagrams (for example, a distance derived from their dot product), and then finds the alignment path through that cost matrix with the lowest cumulative cost. That cumulative cost is the similarity score: the lower it is, the closer the match. Because the path is allowed to stretch or compress time, DTW can handle tracks played at slightly different tempos or with small timing inconsistencies, both of which are common in musical recordings.
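The alignment can be sketched in a few lines of NumPy. This is a textbook version of DTW rather than the exact code from the project: the function name `dtw_cost`, the use of cosine distance as the frame cost, and the normalisation by path-length bound are assumptions made for illustration.

```python
import numpy as np

def dtw_cost(chroma_a, chroma_b):
    """Length-normalised DTW cost between two chromagrams of shape (12, T).

    Illustrative sketch; cosine distance is an assumed choice of frame cost.
    """
    # Unit-normalise every frame so the dot product becomes cosine similarity
    a = chroma_a / np.maximum(np.linalg.norm(chroma_a, axis=0, keepdims=True), 1e-12)
    b = chroma_b / np.maximum(np.linalg.norm(chroma_b, axis=0, keepdims=True), 1e-12)
    cost = 1.0 - a.T @ b  # pairwise frame costs, shape (T_a, T_b)

    n, m = cost.shape
    acc = np.full((n + 1, m + 1), np.inf)  # accumulated cost with a padded border
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            # Cheapest way to reach (i, j): from above, from the left, or diagonally
            best_prev = min(acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1])
            acc[i, j] = cost[i - 1, j - 1] + best_prev

    # Divide by n + m so long and short tracks are comparable
    return acc[n, m] / (n + m)
```

To find a match, the query chromagram would be scored against every track in the dataset and the track with the lowest cost picked. The double loop is slow for long recordings, so in practice an optimised library implementation is a better choice.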

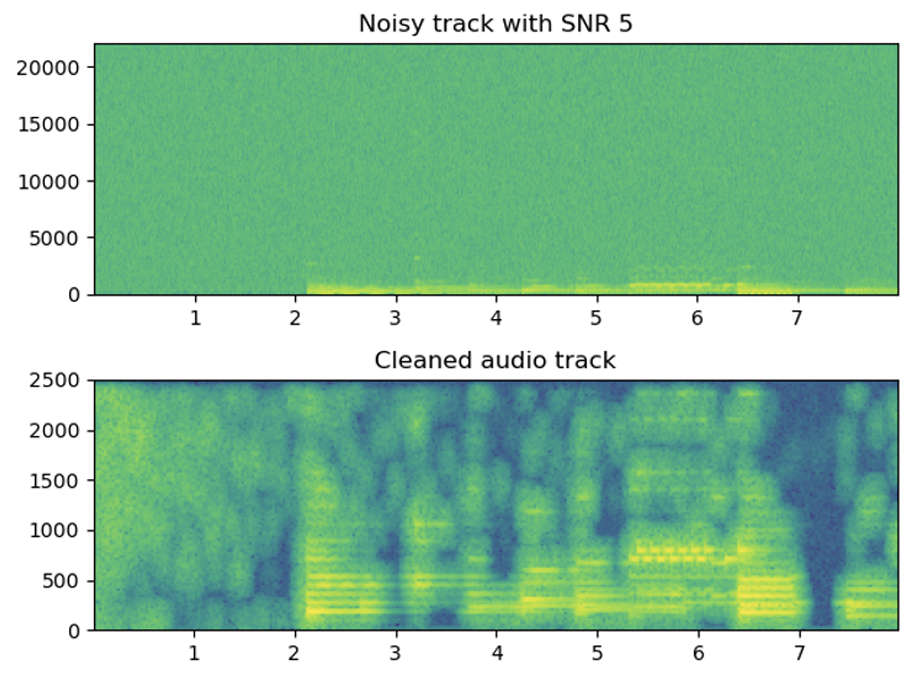

Lastly, we addressed the issue of noise. Many recordings include background sounds or distortions, so we applied spectral gating to clean them up. Spectral gating estimates how loud the noise is in each frequency band and then attenuates any part of the spectrogram that falls below that threshold, so the music passes through while the noise floor is suppressed. This kept our analysis focused on the relevant audio.
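As a rough illustration of the gating idea, the sketch below builds a per-frequency threshold from a noise-only clip and zeroes every time-frequency bin that stays under it. It is a simplified stand-in, not the project's code: the function name `spectral_gate`, the hard on/off mask, the `n_std` setting, and the assumption that a separate noise-only clip is available are all mine.

```python
import numpy as np

def spectral_gate(signal, noise_clip, n_fft=1024, n_std=1.5):
    """Zero time-frequency bins that do not rise above a per-frequency noise threshold.

    Illustrative sketch; assumes a noise-only clip and uses a hard mask.
    """
    hop = n_fft // 2
    # Periodic Hann window: copies shifted by n_fft / 2 sum to 1 (overlap-add)
    window = 0.5 - 0.5 * np.cos(2 * np.pi * np.arange(n_fft) / n_fft)

    def stft(x):
        n_frames = 1 + (len(x) - n_fft) // hop
        frames = np.stack([x[i * hop : i * hop + n_fft] * window
                           for i in range(n_frames)])
        return np.fft.rfft(frames, axis=1)  # shape (n_frames, n_fft // 2 + 1)

    # Threshold per frequency bin: mean noise magnitude plus a safety margin
    noise_mag = np.abs(stft(noise_clip))
    threshold = noise_mag.mean(axis=0) + n_std * noise_mag.std(axis=0)

    # Keep only the bins that are louder than the noise threshold
    spec = stft(signal)
    mask = np.abs(spec) > threshold
    frames = np.fft.irfft(spec * mask, n=n_fft, axis=1)

    # Overlap-add the frames back into a waveform; samples past the last
    # full frame stay zero and the first/last half-frame are faded by the window
    out = np.zeros(len(signal))
    for i, frame in enumerate(frames):
        out[i * hop : i * hop + n_fft] += frame
    return out
```

A hard mask like this can leave audible artifacts; smoothing the mask over time and frequency is a common refinement.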

In the end, we managed to achieve about 77.5% accuracy in matching tracks under the best conditions. It was satisfying to see our work handle tempo differences and even noisy recordings.