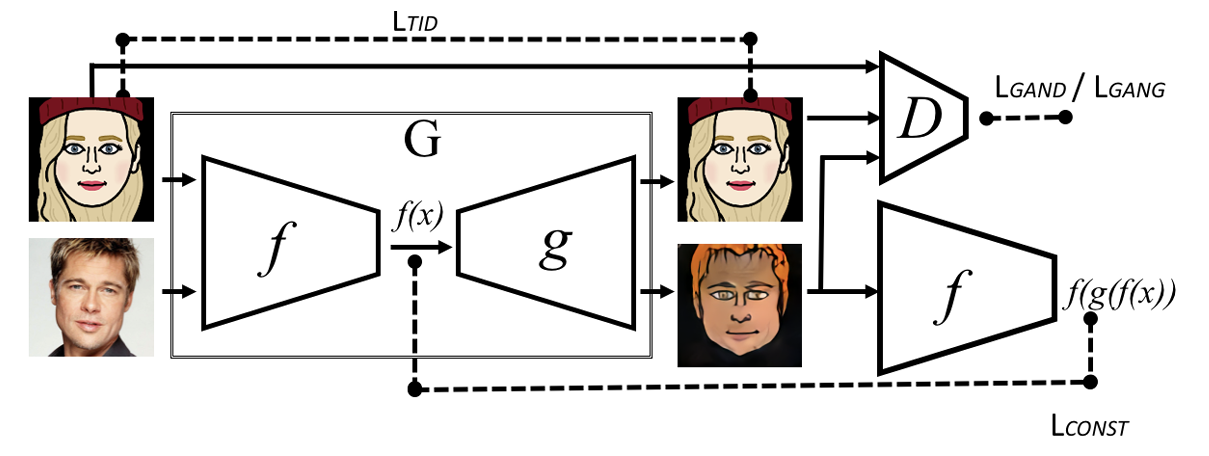

Face to Bitmoji using Domain Transfer Network (DTN)

Summary

Along with my ex-colleague Deepak from Neva Innovation Labs, I implemented the Domain Transfer Network (DTN) in Keras to transfer images between domains, specifically from SVHN digits to MNIST and from CelebA face images to Bitmoji avatars. Our goal was to replicate the approach of the original paper from Facebook AI Research (FAIR), which combines a pre-trained feature encoder with a GAN to generate images in the target domain.

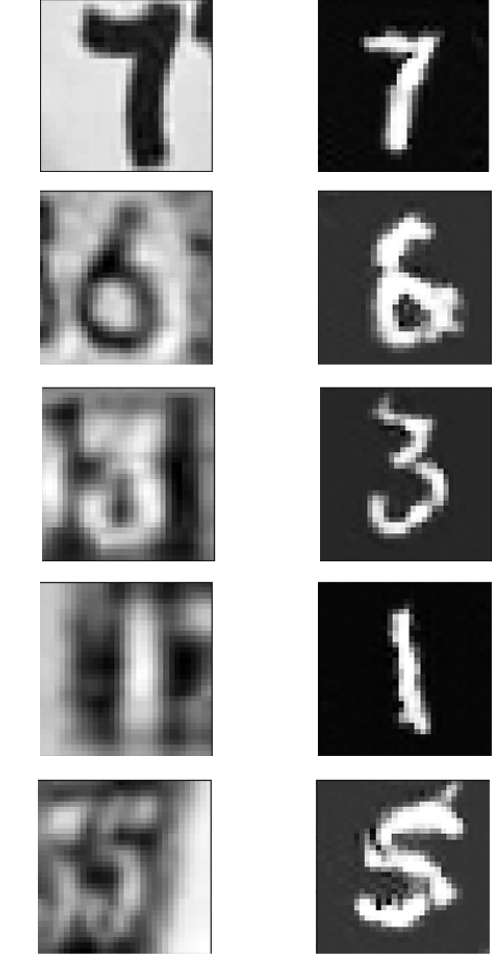

Digit Transfer Results

For the digit transfer, the generated images were visually indistinguishable from MNIST digits. To evaluate the model, we passed the MNIST-style images generated from SVHN through an MNIST classifier that had been trained to a test accuracy of 99.3%. Images generated from the SVHN training and test sets were classified with accuracies of 86.3% and 85.9%, respectively. These are slightly lower than the results in the original paper but still demonstrate strong performance.
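The evaluation described above can be sketched in a few lines. This is a minimal illustration, not our actual evaluation script: `generate` and `classify` are hypothetical callables standing in for the trained DTN generator and the MNIST classifier, and the function name `transfer_accuracy` is made up for this example.

```python
def transfer_accuracy(generate, classify, images, labels):
    """Fraction of source images whose generated counterpart is
    classified with the original source label.

    generate: hypothetical stand-in for the DTN (source image -> target-style image).
    classify: hypothetical stand-in for the target-domain classifier (image -> label).
    """
    if len(images) != len(labels):
        raise ValueError("images and labels must have the same length")
    if not images:
        raise ValueError("need at least one image to compute accuracy")

    # Count how often the digit label survives the domain transfer.
    correct = sum(
        1 for image, label in zip(images, labels)
        if classify(generate(image)) == label
    )
    return correct / len(images)
```

With real models, `images` and `labels` would be the SVHN training or test split, and the returned value would correspond to the accuracy figures reported above.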

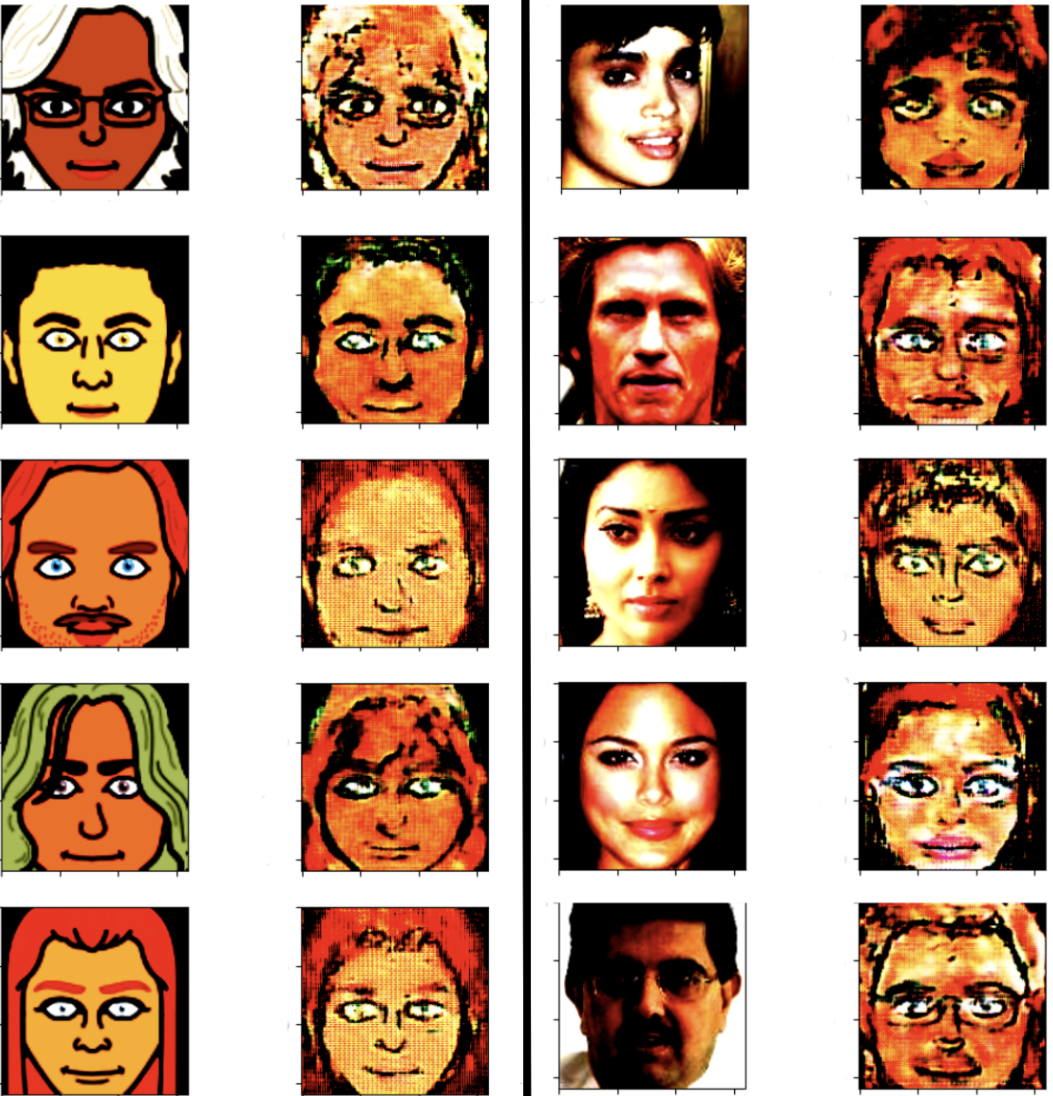

Face-to-Bitmoji Transfer

For face-to-Bitmoji transfer, we used Google's FaceNet as the encoder instead of Facebook's DeepFace, since the DeepFace model used in the original paper was not publicly available. Even with this substitution, transferring facial features faithfully proved challenging. While facial expressions like smiles transferred well, other attributes such as hair and skin color were mapped less successfully to the Bitmoji avatars.

Challenges and Solutions

The main challenges in our work were implementing DTN accurately in Keras, which required custom loss functions, and training the GAN model. Despite these difficulties, our Keras implementation replicated the DTN method and showed its applicability to cross-domain image generation.
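To give a sense of what the custom losses look like, here is a framework-free sketch of two terms the DTN paper adds to the generator's adversarial loss: the f-constancy term (the encoder's features should survive the transfer) and the target identity term (target-domain images should map to themselves). It assumes mean squared error as the distance, and it uses plain Python lists in place of tensors. The names `mse` and `dtn_consistency_losses` are illustrative and not taken from our Keras code.

```python
def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def dtn_consistency_losses(f, g, source_batch, target_batch):
    """Return (L_CONST, L_TID), each averaged over its batch.

    f: encoder, image vector -> feature vector (pre-trained, kept fixed).
    g: generator, feature vector -> image vector.
    Both are passed in as plain callables for illustration.
    """
    def G(x):
        # The full transfer function is the generator applied to encoder features.
        return g(f(x))

    # f-constancy: features of a source image should match the
    # features of its transferred version.
    l_const = sum(mse(f(x), f(G(x))) for x in source_batch) / len(source_batch)

    # Target identity: a target-domain image should be left unchanged by G.
    l_tid = sum(mse(x, G(x)) for x in target_batch) / len(target_batch)

    return l_const, l_tid
```

In a real training loop these two values would be weighted and added to the generator's GAN loss. The weights are hyperparameters and are not shown here.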